Abstract

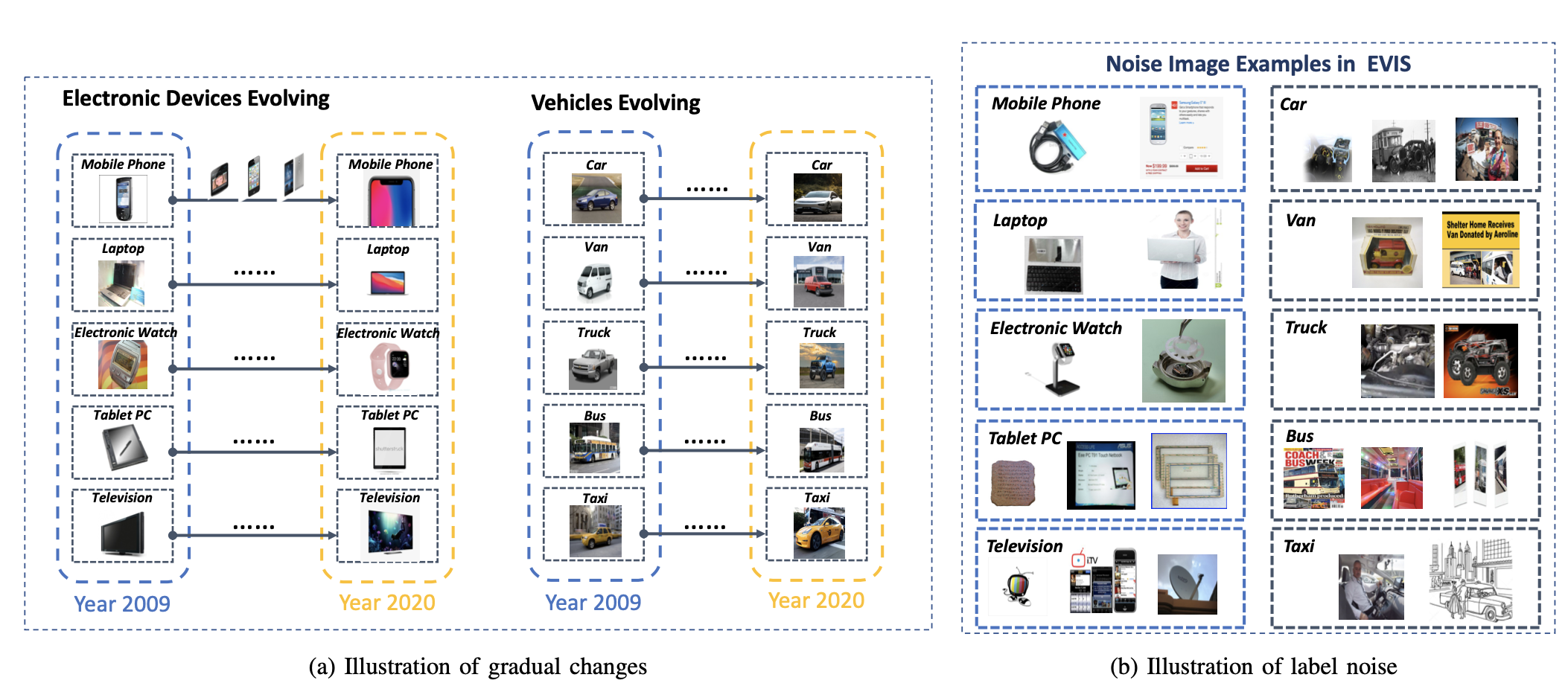

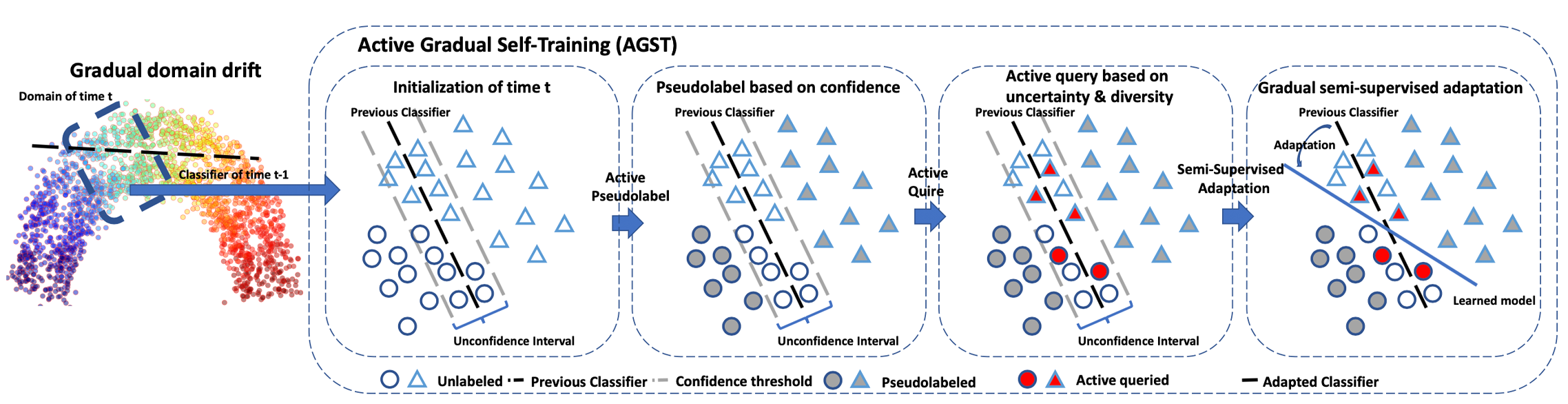

Adapting deep neural networks to changing environments is critical for practical use, especially in online web applications, where the model has access to some labeled data from the source domain, together with unlabeled data and a limited number of labels from a gradually changing target domain. In this paper, we address this problem through active gradual domain adaptation, in which the learner continually and actively selects the most informative target samples to label, improving label efficiency, and uses both labeled and unlabeled samples to improve model adaptation under gradual domain drift. We propose the active gradual self-training (AGST) algorithm, which introduces two novel designs: active pseudolabeling and gradual semi-supervised domain adaptation. Specifically, AGST pseudolabels the samples on which it is highly confident, selects the most informative of the unconfident samples for labeling according to both uncertainty and diversity, and then gradually self-trains on the confident pseudolabels, the actively queried labels, and the data features. For our experiments, we create a new dataset, Evolving-Image-Search (EVIS), collected from a web search engine and exhibiting gradual domain drift. Experimental results on a synthetic dataset, a real-world dataset, and EVIS show that AGST achieves up to 62% accuracy improvement over unsupervised gradual self-training with only 5% additional labels, and 19% over directly applying CLUE, which demonstrates the effectiveness of active pseudolabeling and gradual semi-supervised domain adaptation.
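The selection step described in the abstract can be illustrated with a small sketch. This is not the paper's implementation: the function name `active_pseudolabel`, the confidence `threshold`, the query `budget`, and the scoring rule (prediction entropy multiplied by squared distance to the nearest already-queried sample) are all assumptions chosen only to show how confident samples might be pseudolabeled while unconfident ones are ranked by uncertainty and diversity.

```python
import math


def entropy(p):
    """Shannon entropy of one predicted class distribution."""
    return -sum(q * math.log(q) for q in p if q > 0.0)


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def active_pseudolabel(probs, features, threshold=0.9, budget=2):
    """Split unlabeled target samples into pseudolabeled and queried sets.

    probs:    per-sample class probabilities from the current model
    features: per-sample feature vectors (used only for diversity)
    Returns (pseudolabels, queries): a dict mapping index -> predicted class
    for confident samples, and a list of indices to send to the annotator.
    The threshold, budget, and scoring rule are illustrative assumptions.
    """
    pseudolabels, candidates = {}, []
    for i, p in enumerate(probs):
        if max(p) >= threshold:
            pseudolabels[i] = p.index(max(p))  # confident: keep the prediction
        else:
            candidates.append(i)               # unconfident: may be queried

    queries = []

    def score(i):
        # First pick: uncertainty only. Later picks: uncertainty weighted by
        # the distance to the closest already-queried sample (diversity).
        if not queries:
            return entropy(probs[i])
        nearest = min(squared_distance(features[i], features[j]) for j in queries)
        return entropy(probs[i]) * nearest

    while candidates and len(queries) < budget:
        best = max(candidates, key=score)
        queries.append(best)
        candidates.remove(best)
    return pseudolabels, queries


# Toy example with five target samples and two classes.
probs = [[0.95, 0.05], [0.5, 0.5], [0.55, 0.45], [0.6, 0.4], [0.02, 0.98]]
features = [[0.0, 0.0], [1.0, 1.0], [1.1, 1.0], [5.0, 5.0], [9.0, 9.0]]
pseudolabels, queries = active_pseudolabel(probs, features)
```

In this toy run, samples 0 and 4 are pseudolabeled, sample 1 is queried first as the most uncertain, and sample 3 is queried next: sample 2 is almost as uncertain as sample 1 but lies right beside it in feature space, so the diversity term favors sample 3. A self-training step would then fit the model on the pseudolabels together with the queried labels.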

Presentation

Code and Models

Citation

@ARTICLE{Zhou2021,
  author={Zhou, Shiji and Wang, Lianzhe and Zhang, Shanghang and Wang, Zhi and Zhu, Wenwu},
  journal={IEEE Transactions on Multimedia},
  title={Active Gradual Domain Adaptation: Dataset and Approach},
  year={2022},
  pages={1-1},
  doi={10.1109/TMM.2022.3142524}
}